Talos Test Scenario and Results

Related Changes

| Commit | Revision | Change |
|---|---|---|
| c0fc9f8cac7d923d1a06a7235d21e54919d3d42a | D66598 | Added priority queue support. |
| ee3d9d614fd36e5ea07460228c670f40e434dbf4 | D66823 | Changed the forwarding model so that each machine has its own thread pool and Thrift client. |
| 5e068156aefda275c926b90c50f8df0cfebec7f9 | D67054 | Added an expiration timestamp to read/write requests; a request is returned from the Talos queue immediately once its time limit has passed. |
| 4cde032af7c9d3f698fc2072cf37dfa38adc2932 | D67338 | When RestServer is in the DelayRemoved state, it immediately returns PartitionNotServe. |
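
The expiration behavior described for D67054 can be pictured with a minimal sketch. This is not the Talos implementation: the `Request` class, its `deadline` field, and the `drain` helper are hypothetical names chosen for illustration, and the real queue is presumably serviced by worker threads rather than a single loop.

```python
import time
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    name: str
    deadline: float  # absolute expiration time in seconds (hypothetical field)


def drain(queue, handle, now=time.monotonic):
    """Process queued requests in order, answering expired ones immediately.

    Returns a list of (request name, outcome) pairs.
    """
    results = []
    while queue:
        req = queue.popleft()
        if now() > req.deadline:
            # Past the time limit: skip the real work and reply right away.
            results.append((req.name, "expired"))
        else:
            results.append((req.name, handle(req)))
    return results
```

Checking the deadline at dequeue time means a request that has already timed out on the client side does not consume a worker's time, which is one plausible reason such a change would shorten queue wait under load.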

Machine Environment

- 2 clients, 4 Talos machines, 4 HDFS, 4 HBase; Talos and HDFS/HBase are distributed; all machines are 2U models.

- Reads and writes are synchronous; each partition corresponds to one thread.

Test Schema

There are two client machines. Each client creates its own topic with 1200 partitions and starts 2400 threads: 1200 threads call putMessage concurrently and the other 1200 call getMessage concurrently. Each putMessage packet is 70K in size, plus some other fields that may increase its length. Each thread sends 2.1 packets per second, so a single client simulates roughly 2500 PutMessage requests per second (1200 × 2.1 = 2520); the GetMessage rate is also about 2500 per second. These rates match the actual traffic of the current c3srv-talos cluster. Running both clients at the same time doubles the traffic and reproduces the state of the previous c3srv-talos cluster at the time of its system failure.
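
The load figures above follow from simple arithmetic, sketched here. The constant and function names are made up for this example, and the bandwidth estimate assumes that "70K" means 70 KiB of payload per packet, which the report does not state explicitly.

```python
PARTITIONS_PER_TOPIC = 1200       # one put thread and one get thread per partition
PACKETS_PER_THREAD_PER_SEC = 2.1  # send rate of each put thread
PAYLOAD_KB = 70                   # assumed KiB; extra fields would add to this


def put_rate(clients):
    """PutMessage requests per second generated by the given number of clients."""
    return clients * PARTITIONS_PER_TOPIC * PACKETS_PER_THREAD_PER_SEC


def put_payload_mb_per_sec(clients):
    """Approximate put payload bandwidth in MiB/s, ignoring the extra fields."""
    return put_rate(clients) * PAYLOAD_KB / 1024
```

With one client this gives about 2520 requests per second, which the report rounds to 2500; with two clients the rate doubles to about 5040.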

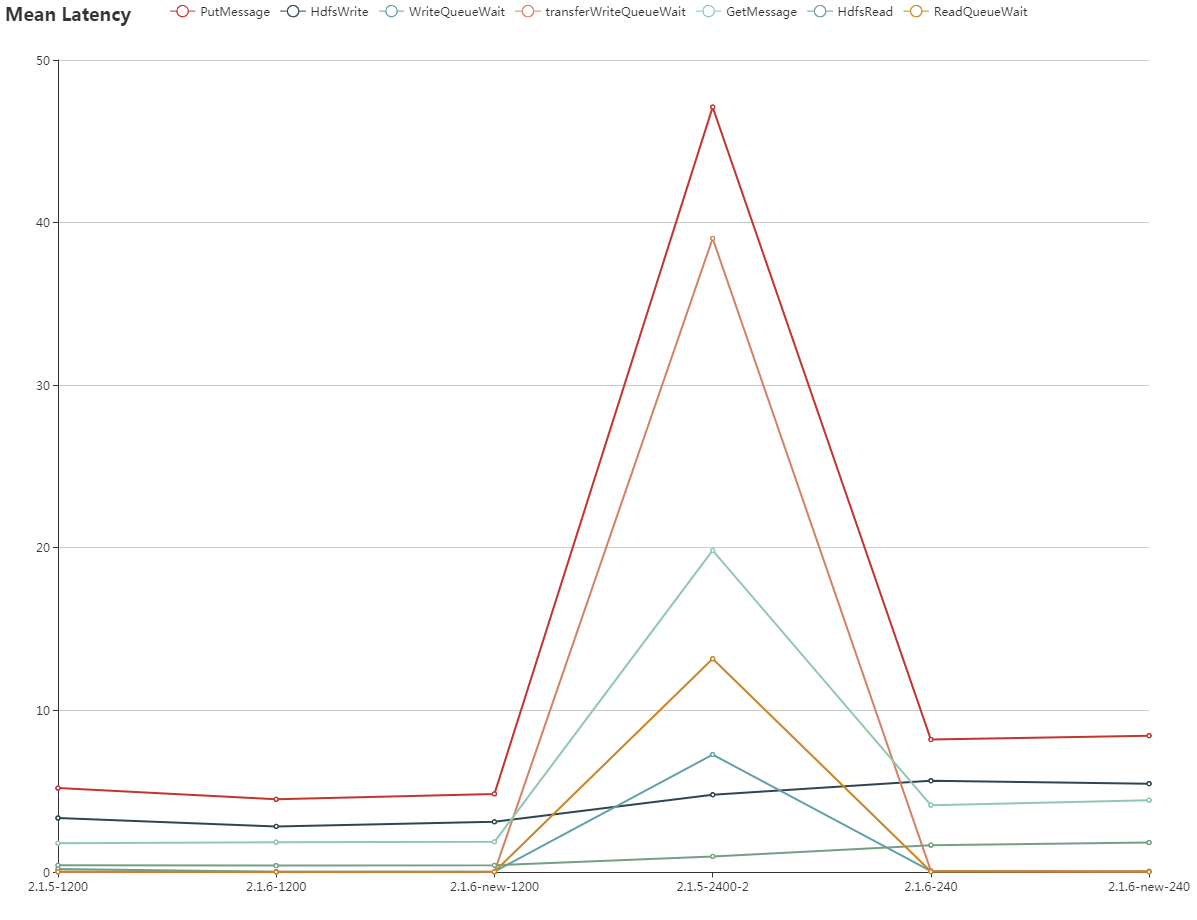

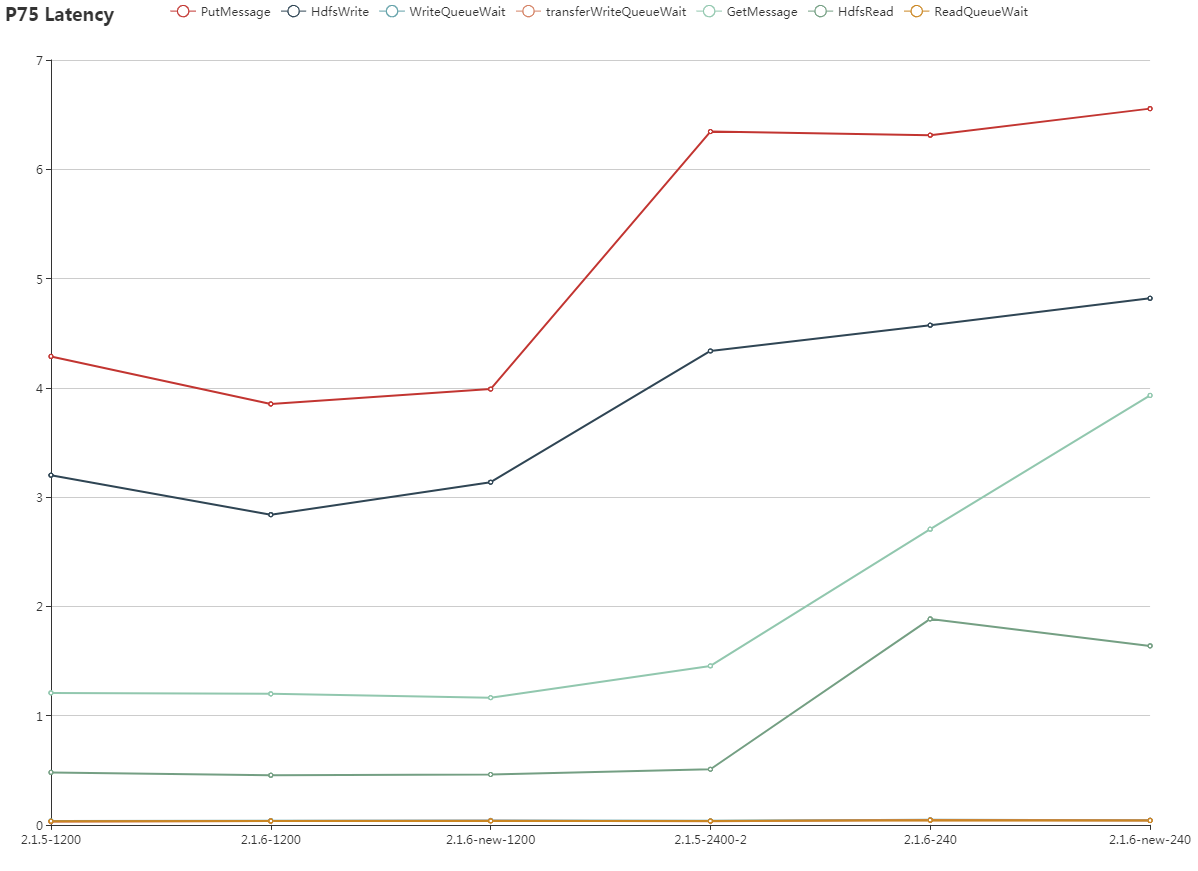

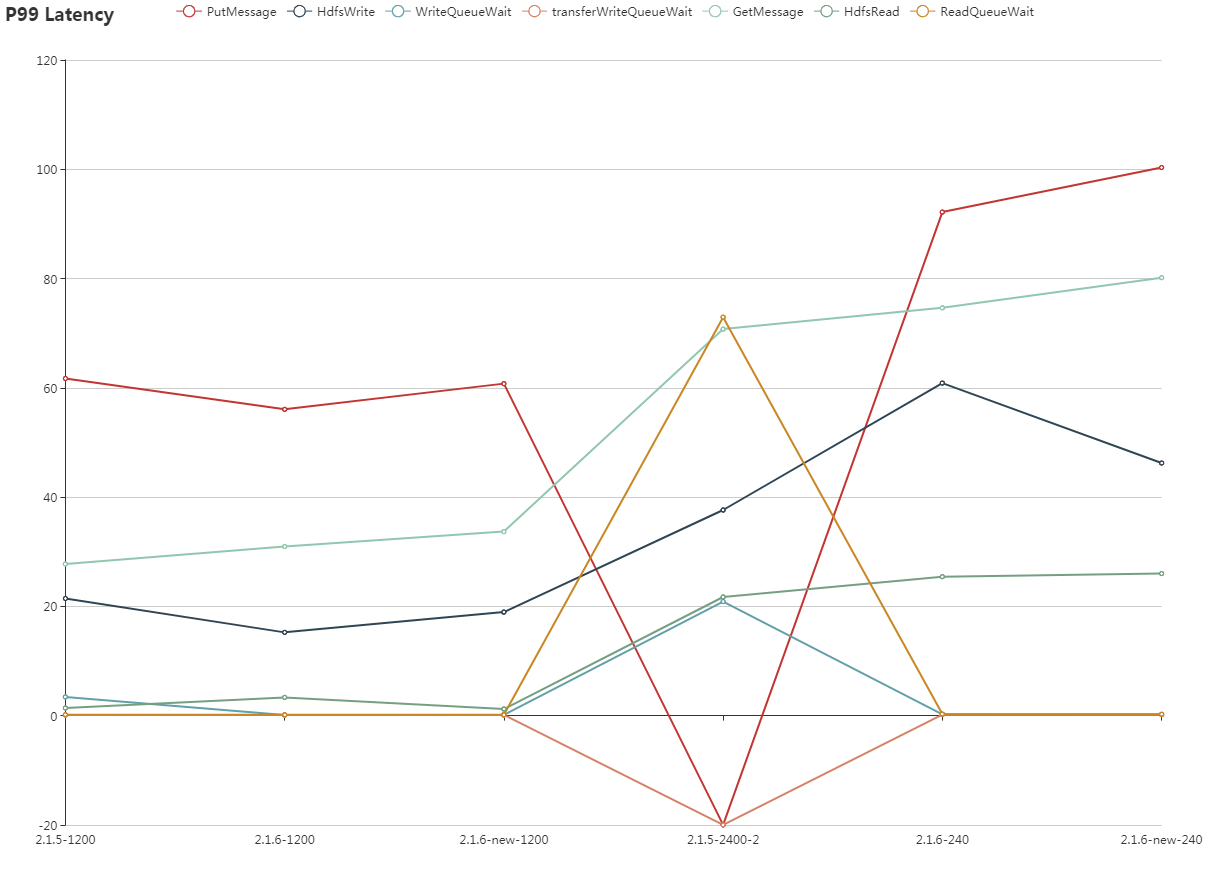

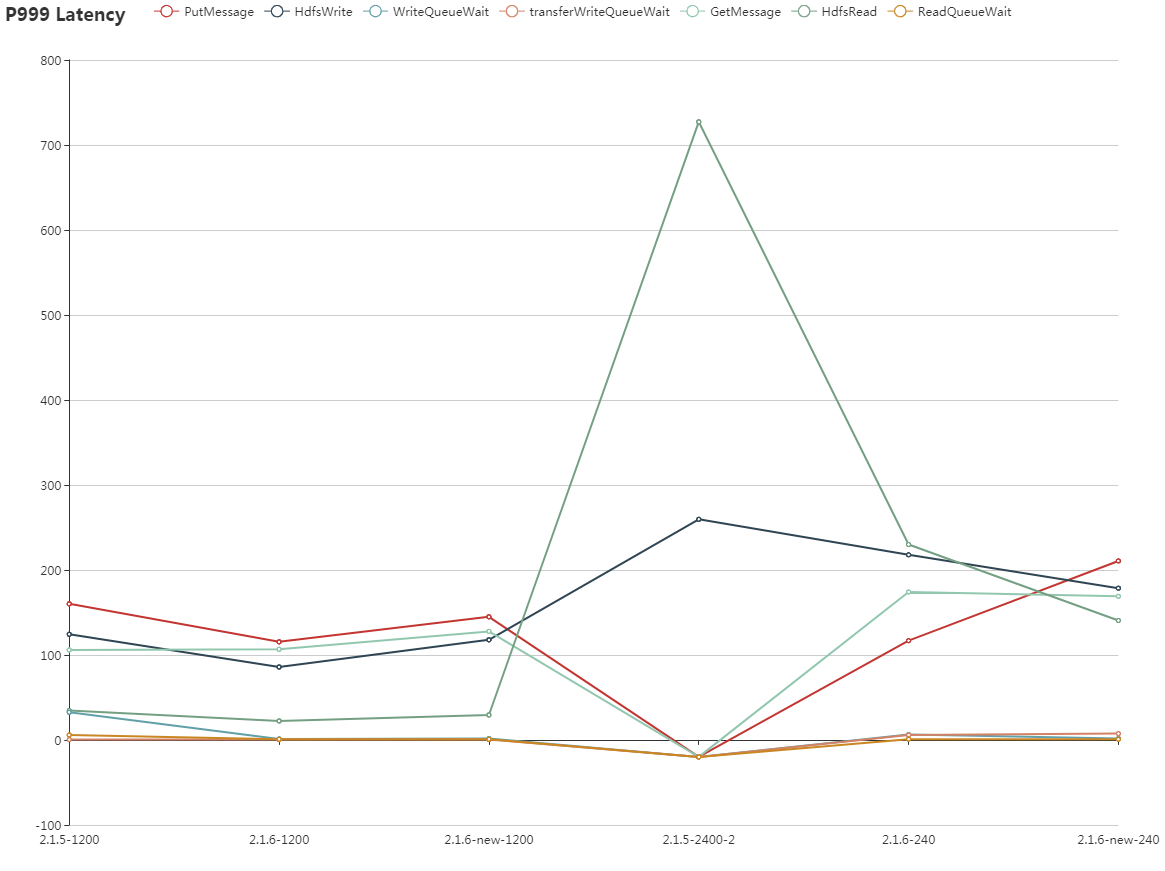

We compared version 2.1.5, version 2.1.6, and version 2.1.6 (enhanced), running separate tests with one client and with two clients. The test cases are labeled 2.1.5-1200, 2.1.6-1200, 2.1.6-new-1200, 2.1.5-2400, 2.1.6-2400, and 2.1.6-new-2400.

Related Parameters

2.1.5: The putMessage, getMessage, transferPutMessage, and transferGetMessage thread counts are all 200, and the limit on data arriving at a single-machine client is 100; there are 10 Jetty acceptor threads and 100 worker threads.

2.1.6: The putMessage and getMessage thread counts are 200; the transferPutMessage and transferGetMessage thread counts are 50. Since there are 4 machines, each process has 150 forwarding threads in total, with 50 threads for each single-machine client; there are 10 Jetty acceptor threads and 100 worker threads.

2.1.6-new: Identical to 2.1.6, except that the number of Jetty worker threads is 300.
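
The per-machine forwarding model used from 2.1.6 onward can be sketched as one thread pool per destination. This is an illustration, not Talos code: the `Forwarder` class and the peer names are hypothetical, and reading the 150-thread total as 3 peer machines × 50 threads is an inference from the figures above.

```python
from concurrent.futures import ThreadPoolExecutor


class Forwarder:
    """Keeps a separate thread pool for each destination machine (sketch)."""

    def __init__(self, peers, threads_per_peer=50):
        self.threads_per_peer = threads_per_peer
        self.pools = {
            peer: ThreadPoolExecutor(max_workers=threads_per_peer)
            for peer in peers
        }

    def forward(self, peer, fn, *args):
        # A slow peer can only exhaust its own pool, not the pools of others.
        return self.pools[peer].submit(fn, *args)

    def total_threads(self):
        """Upper bound on forwarding threads across all destinations."""
        return len(self.pools) * self.threads_per_peer

    def shutdown(self):
        for pool in self.pools.values():
            pool.shutdown(wait=True)
```

Compared with a single shared pool of 200 threads, isolating the pools bounds how much damage one unresponsive machine can do: requests bound for healthy machines keep their own 50 threads.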

Statistical Data

Data Analysis

In a scenario where there are 1200 partitions

Regarding the delay: 2.1.6 < 2.1.6-new < 2.1.5

Regarding the queue wait time: 2.1.6 < 2.1.6-new << 2.1.5

The two improvements mentioned above behave much like situation 1: the delay increases only slightly, but the queue wait time improves very noticeably at both P99 and P999, primarily because the cluster pressure is lower.

As for the difference between 2.1.6-new and 2.1.6: the cluster pressure is not high in this scenario, so the extra threads mainly lead to a certain amount of waste.
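
For readers unfamiliar with the P99 and P999 figures, the sketch below shows one common way to compute them from raw samples, the nearest-rank method. The report does not say which method was used to produce its statistics, so treat this as an assumption; the function name is also illustrative.

```python
import math
from fractions import Fraction


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Fraction(str(p)) keeps values such as 99.9 exact, avoiding float rounding.
    rank = math.ceil(Fraction(str(p)) * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]
```

Calling `percentile(wait_times, 99)` and `percentile(wait_times, 99.9)` on a list of queue wait times yields the P99 and P999 values; both are dominated by the slowest requests, which is why they are sensitive to the thread queue changes.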

In a scenario where there are 2400 partitions

Regarding the delay: 2.1.6 < 2.1.6-new << 2.1.5

Regarding the queue wait time: 2.1.6 < 2.1.6-new << 2.1.5

Special explanation:

2.1.5-2400-2: The full test run for version 2.1.5 lasted 70 minutes. During the first 35 minutes the statistics were very high, client timeouts were severe, and the cluster was effectively unusable. During the second 35 minutes the situation improved, but the latency issues persisted. A few statistics are therefore displayed as "-20" in the image: the actual value was extremely large, so it is marked as a negative number for display purposes.

The problem seen in previous tests, where HDFS operations took up only a low proportion of the entire read/write operation, did not recur in this test.

Summary

When pressure is increased by 100%, the new version still meets the predetermined objectives and never enters a service-unavailable state. In addition, thanks to the optimized thread queue model, the long-tail behavior when processing tasks from the thread pool has greatly improved, in line with our expectations.