Talos Test Scenarios and Results

Related Changes

| Commit | Revision | Change |
|---|---|---|
| c0fc9f8cac7d923d1a06a7235d21e54919d3d42a | D66598 | Added priority queue support. |
| ee3d9d614fd36e5ea07460228c670f40e434dbf4 | D66823 | Amended the forwarding model so that each machine uses its own thread pool and Thrift client. |
| 5e068156aefda275c926b90c50f8df0cfebec7f9 | D67054 | Added an expiration timestamp to read/write requests; a request taken from the Talos queue after its time limit has passed is returned immediately. |
| 4cde032af7c9d3f698fc2072cf37dfa38adc2932 | D67338 | When RestServer is in the DelayRemoved state, immediately return PartitionNotServe. |

Machine Environment

- 2 clients, 4 Talos machines, 4 HDFS, 4 HBase; Talos and HDFS/HBase are distributed; all machines are 2U models.

- Reads and writes are synchronous, and each partition corresponds to one thread.

Test Schema

There are two client machines. Each client creates its own topic, and each topic has 120 partitions. Each client starts 240 threads: 120 threads call putMessage concurrently and the other 120 call getMessage concurrently. Each putMessage packet is 10 KB, plus some other fields that may increase the length. Every read and write thread uses a synchronous model and sends its next request immediately after the previous one returns.
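The closed-loop load pattern described above can be sketched as follows. This is only an illustration, not the actual test harness: `put_message` and `get_message` are hypothetical blocking callables standing in for the real Talos client calls, and the function name and parameters are assumptions made for this sketch.

```python
import threading
import time


def run_closed_loop(put_message, get_message, partitions=120,
                    duration_s=1.0, payload_size=10 * 1024):
    """Run one writer and one reader thread per partition for duration_s seconds.

    put_message(partition, payload) and get_message(partition) are
    hypothetical blocking calls; they are not the real Talos client API.
    Returns (puts_completed, gets_completed).
    """
    payload = b"x" * payload_size
    deadline = time.monotonic() + duration_s
    counts = {"put": 0, "get": 0}
    lock = threading.Lock()

    def worker(kind, partition):
        done = 0
        while time.monotonic() < deadline:
            # Synchronous model: block until the request returns,
            # then immediately issue the next one.
            if kind == "put":
                put_message(partition, payload)
            else:
                get_message(partition)
            done += 1
        with lock:
            counts[kind] += done

    threads = [
        threading.Thread(target=worker, args=(kind, p))
        for p in range(partitions)
        for kind in ("put", "get")
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counts["put"], counts["get"]
```

With the default of 120 partitions this starts 240 threads per client, matching the setup above. Because each thread has at most one request outstanding, the offered load adapts to the server's latency rather than being fixed.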

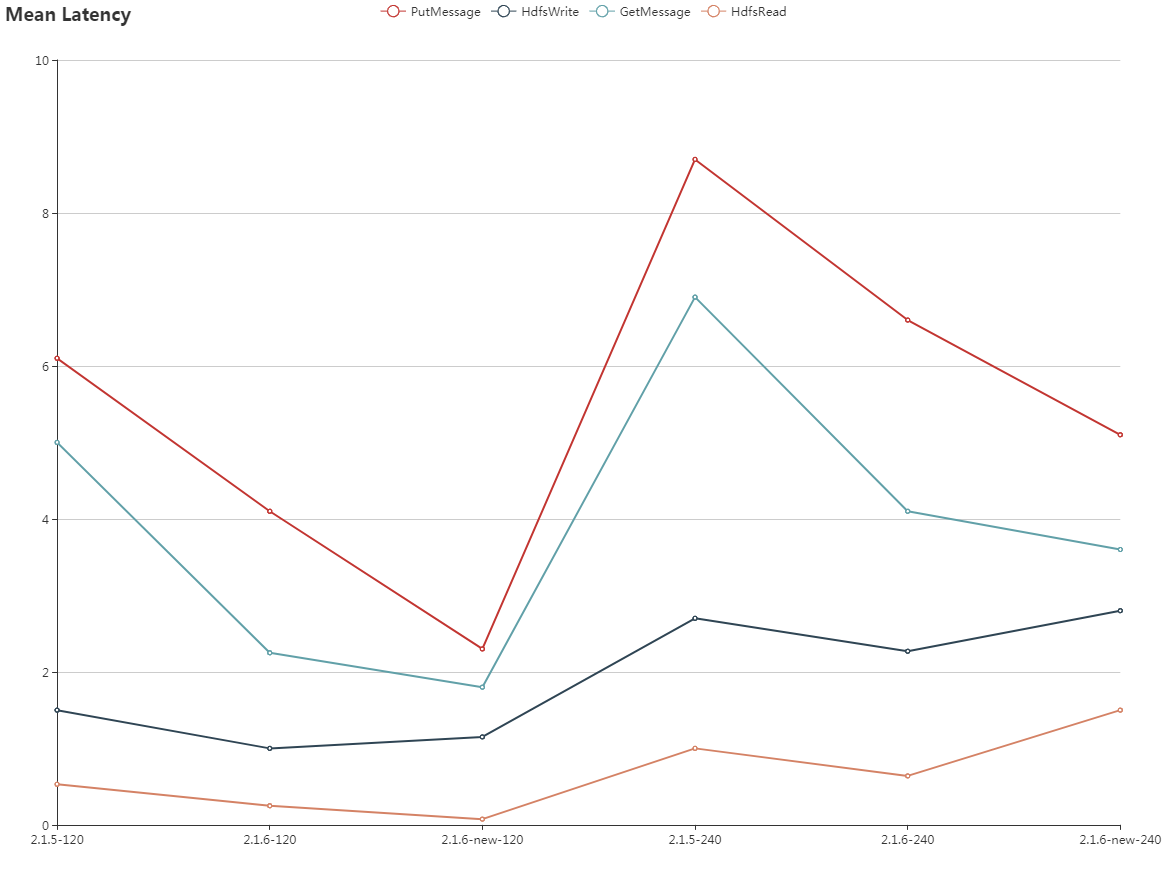

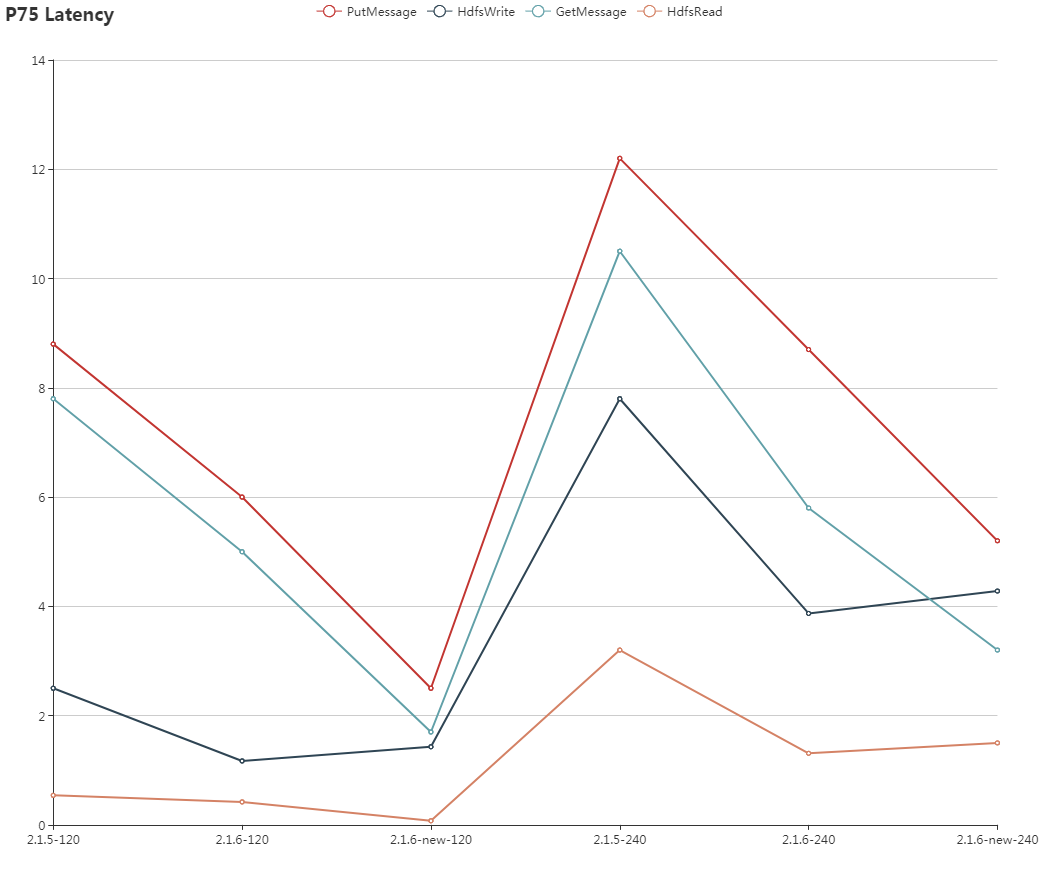

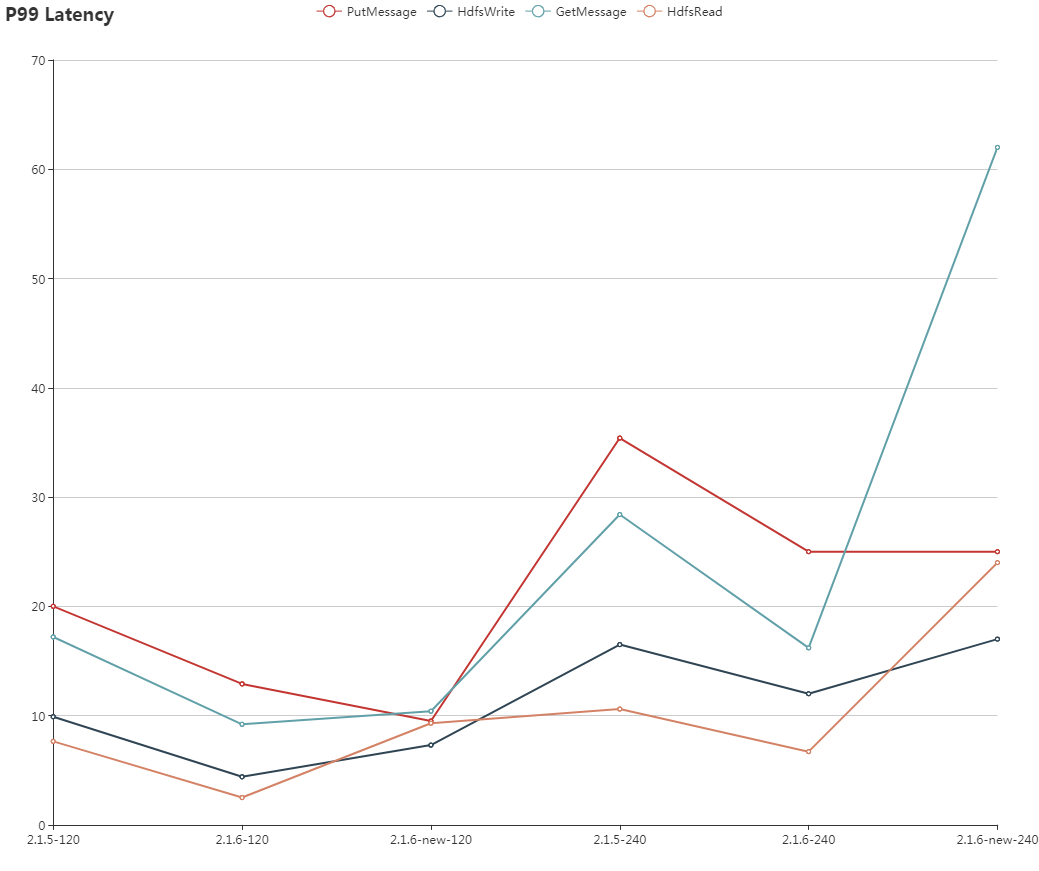

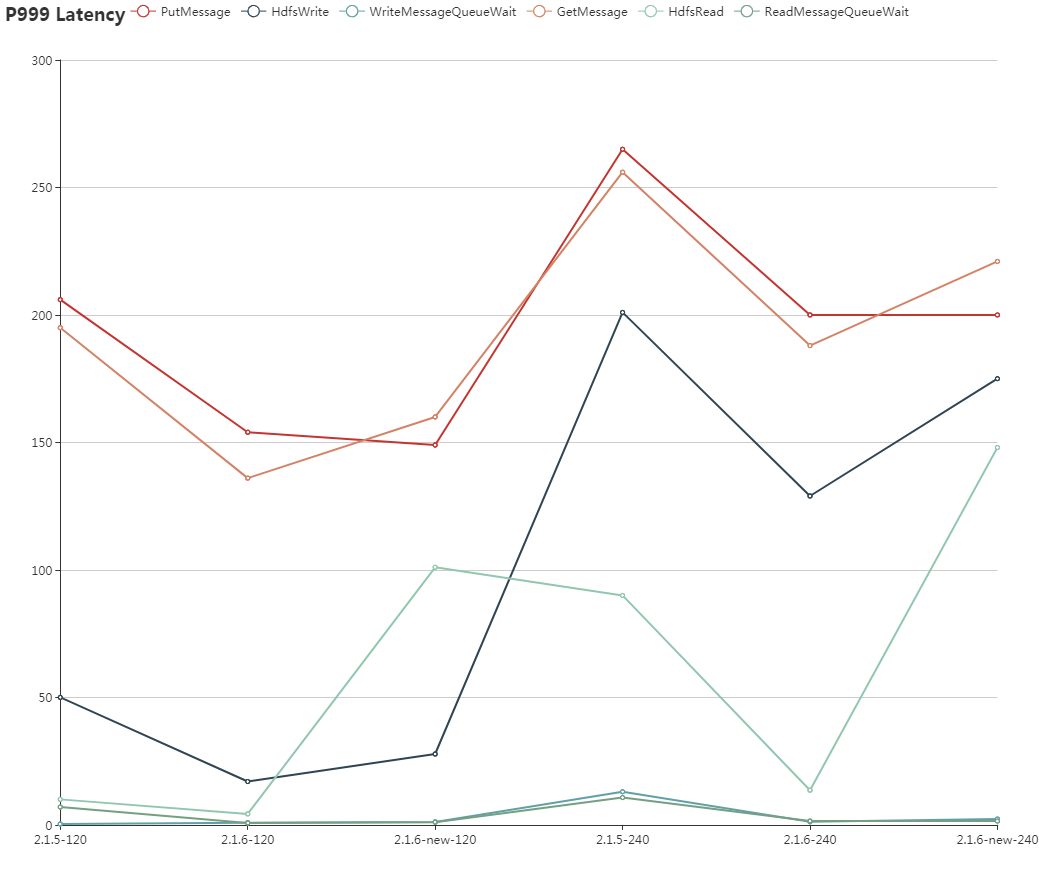

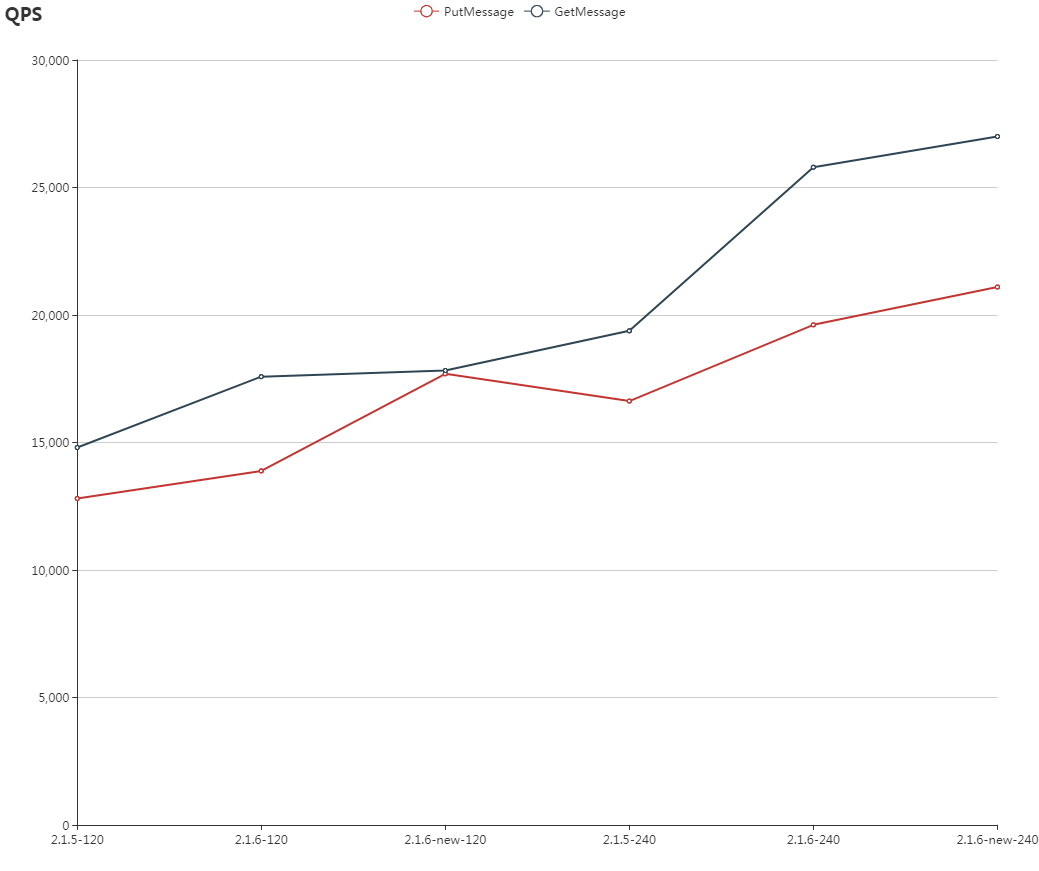

We tested version 2.1.5, version 2.1.6, and version 2.1.6 (enhanced, labeled 2.1.6-new), and ran separate comparative tests with one client and with two clients. The test cases are labeled 2.1.5-120, 2.1.6-120, 2.1.6-new-120, 2.1.5-240, 2.1.6-240, and 2.1.6-new-240.

Related Parameters

2.1.5: The putMessage, getMessage, transferPutMessage, and transferGetMessage thread counts are all 200; the limit for a single machine's client is 100; there are 10 Jetty acceptor threads and 100 worker threads.

2.1.6: The putMessage and getMessage thread counts are 200; the transferPutMessage and transferGetMessage thread counts are 50. Since there are 4 machines, each process has 150 such threads in total, 50 for each single machine's client; there are 10 Jetty acceptor threads and 100 worker threads.

2.1.6-new: Identical to 2.1.6, except that there are 300 Jetty worker threads.

Statistical Data

Data Analysis

Comparison of Versions 2.1.5 ~ 2.1.6

1. QPS rises by more than 10%, latency in the different dimensions drops by more than 30%, and the latency of direct HDFS reads/writes also drops by 30% ~ 50%.

This performance improvement is primarily due to the optimized thread pool model. In version 2.1.5's thread model, a task's key (TopicAndPartition) is hashed to select the thread that executes it, so a large number of threads can end up being used. In version 2.1.6's thread model, a thread is selected in one of two ways, by key or by the number of waiting tasks: if some thread is already executing tasks for the task's key, that thread is selected directly; otherwise the thread with the fewest waiting tasks is selected, and if all threads have the same number of waiting tasks, the one with the smallest thread ID is selected.

The biggest difference between the two schemes is how many threads take part in executing tasks: in version 2.1.5 essentially all threads do, whereas in version 2.1.6 only a very small number do. Because of kernel scheduler time slicing, when the same number of tasks is spread across more threads, cycling through the time slices can increase the wait. Therefore 2.1.6 can achieve better results. For example, in 2.1.6-120, putMessage's average latency is 4.1 ms and 13884 putMessage requests are executed per second, so in theory the number of threads needed is 13884 / (1000 / 4.1) ≈ 57, and each machine needs a little more than 14 threads on average.

Note: verifying the above hypothesis is relatively costly. The distribution of waiting tasks per thread has only been measured in version 2.1.6, where the vast majority of threads had 0 waiting tasks. To verify the hypothesis, additional tests are needed, for example using 2.1.5's scheme to see how the number of threads affects latency, or using 2.1.6's scheme to see the distribution of the thread IDs that execute tasks.
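The two thread-selection schemes described above can be sketched as follows. This is a minimal single-threaded illustration of the selection rules only, not the Talos implementation: the class and function names, the use of CRC32 as the hash, and the bookkeeping in `task_done` are all assumptions made for this sketch.

```python
import zlib


def select_by_hash(key, num_threads):
    """2.1.5-style selection: a stable hash of the key picks the thread.

    CRC32 is an assumption here; the source does not name the hash function.
    """
    return zlib.crc32(key.encode("utf-8")) % num_threads


class AffinityLeastLoadedSelector:
    """2.1.6-style selection as described in the text (hypothetical class)."""

    def __init__(self, num_threads):
        self.waiting = [0] * num_threads  # unfinished tasks per thread
        self.owner = {}                   # key -> thread currently executing that key
        self.inflight = {}                # key -> unfinished tasks for that key

    def select(self, key):
        thread = self.owner.get(key)
        if thread is None:
            # No thread is executing this key: take the thread with the
            # fewest waiting tasks. min() returns the first minimum, so
            # ties go to the smallest thread ID.
            thread = min(range(len(self.waiting)),
                         key=self.waiting.__getitem__)
            self.owner[key] = thread
        self.waiting[thread] += 1
        self.inflight[key] = self.inflight.get(key, 0) + 1
        return thread

    def task_done(self, key):
        thread = self.owner[key]
        self.waiting[thread] -= 1
        self.inflight[key] -= 1
        if self.inflight[key] == 0:
            # The key no longer has a thread executing it.
            del self.inflight[key]
            del self.owner[key]
```

Under this rule, idle low-numbered threads are reused first, so a lightly loaded pool concentrates work on a few threads, whereas hashing spreads keys across the whole pool regardless of load.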

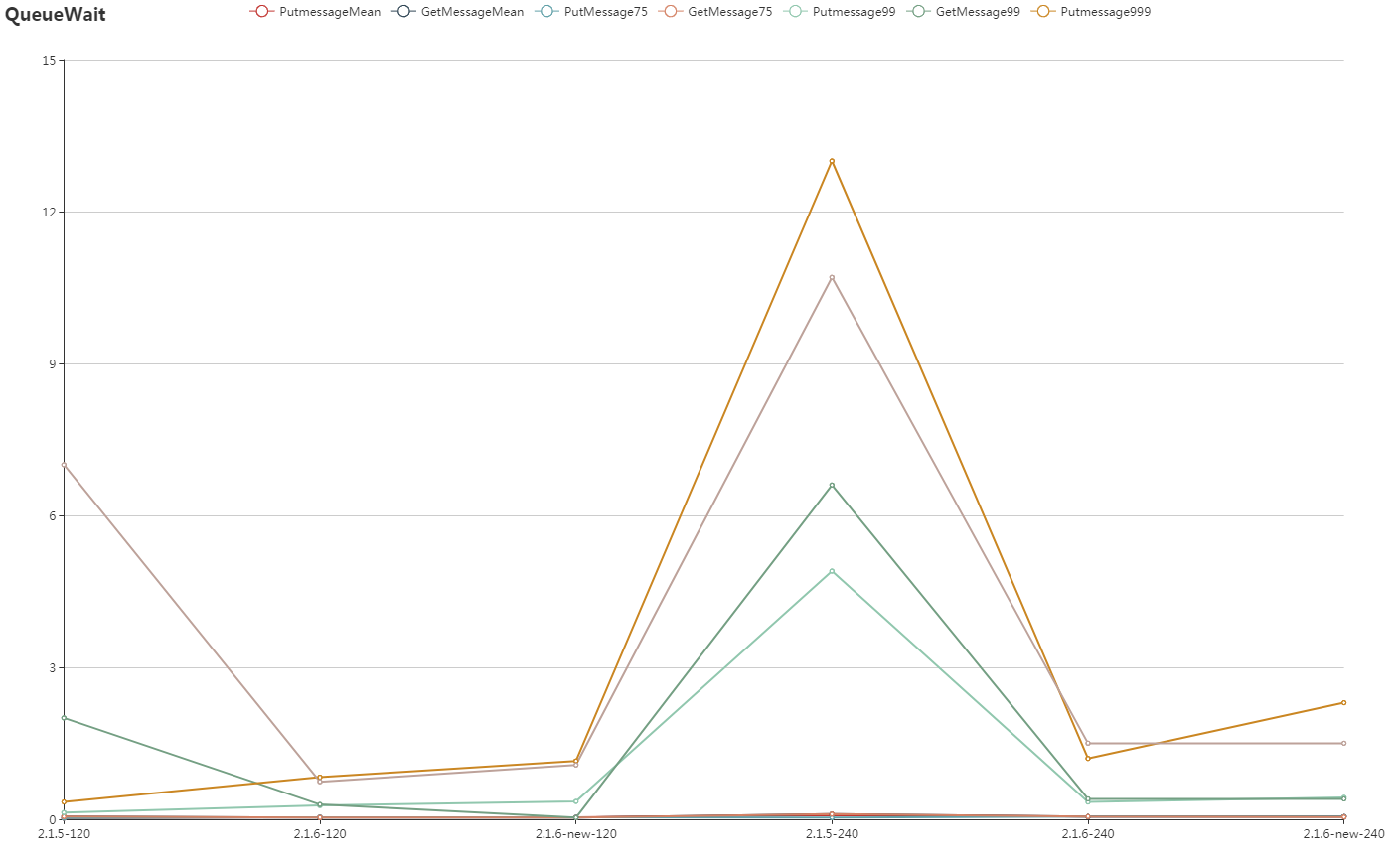
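The thread-count estimate for 2.1.6-120 follows Little's law (average concurrency = request rate × average latency). A small sketch of the arithmetic, using the figures quoted in the text; the function name is an assumption made for illustration:

```python
def threads_needed(requests_per_second, avg_latency_ms):
    """Little's law: average number of busy threads = rate x time per request."""
    return requests_per_second * avg_latency_ms / 1000.0


# Figures from the 2.1.6-120 case: 13884 putMessage/s at 4.1 ms average latency.
busy_threads = threads_needed(13884, 4.1)

# The load is spread over the 4 Talos machines.
per_machine = busy_threads / 4
```

This is a lower bound on the threads kept busy by putMessage alone, assuming each request occupies exactly one thread for its full latency.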

2. The wait time's long tail is greatly reduced

This is mainly due to the thread model optimization. Version 2.1.5 selects threads by hash, which can assign a task to a thread even when that thread is busy, so the long tail is relatively long. In the new version, the average and P75 wait times increase slightly, but the absolute values are very small, so the effect can be ignored.
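For reference, the percentile figures used in this comparison (P75, P99, P999) can be computed from raw wait-time samples along these lines. This sketch uses the nearest-rank definition, which is an assumption; the source does not say which percentile method the monitoring system uses.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100.0 * len(ordered)))
    return ordered[rank - 1]
```

A distribution with a long tail shows a small P75 but a much larger P99 or P999, for example `percentile(waits, 75)` versus `percentile(waits, 99.9)` on the same sample list.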

3. Anomaly in version 2.1.6's running time

In version 2.1.6, the HdfsWrite and HdfsRead latency accounts for only about 25% of the total PutMessage and GetMessage latency, which is unreasonable. The Talos RestServer's own logic is simple: it performs some authentication and authorization work, and after that the data is exchanged with HDFS.

Comparison of Versions 2.1.6 ~ 2.1.6-new

putMessage/getMessage QPS increased by more than 10%, and putMessage/getMessage latency at P75 decreased by 80%~150%; at the same time, HDFS time accounts for more than 60% of the total time. There is no clear effect on the overall latency at P99 and P999, although the proportion taken up by HDFS increased.

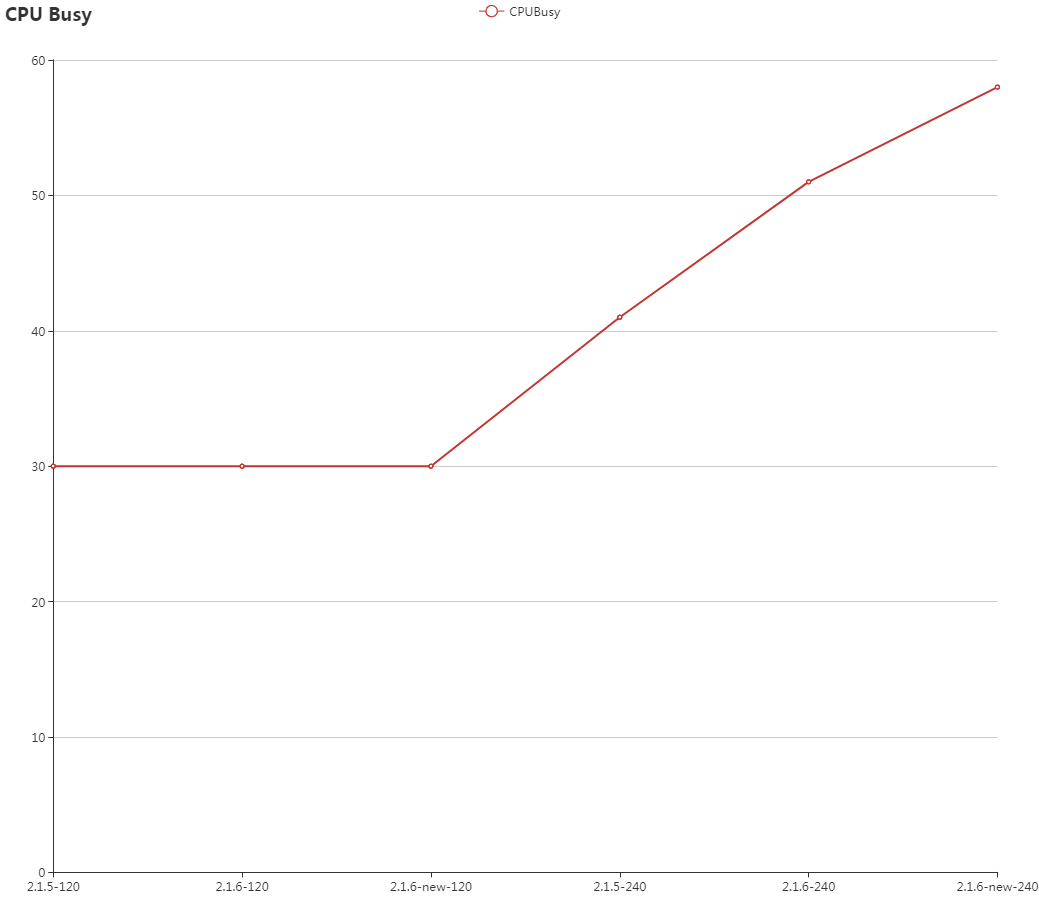

The capacity increased primarily because of the larger number of Jetty worker threads. This shows up mainly as an enormous reduction in the time between the start of Auth execution and the actual dispatch of PutMessage/GetMessage. Analysis of CPU Busy shows that as the number of threads increases, CPU Busy also rises to a certain degree, so stalls and latency are reduced.